Auto-Piano Based On Audio Detection

An auto-piano based on Raspberry Pi that identifies the music it hears and plays it back on the piano.

This project builds an auto-piano based on a Raspberry Pi 4B and other peripherals. It can identify music played nearby and reproduce it on the piano. The project is divided into a software part and a hardware part.

Software Part

In the software part, we use the Python library librosa to process the audio signals. The whole process consists of the following four steps.

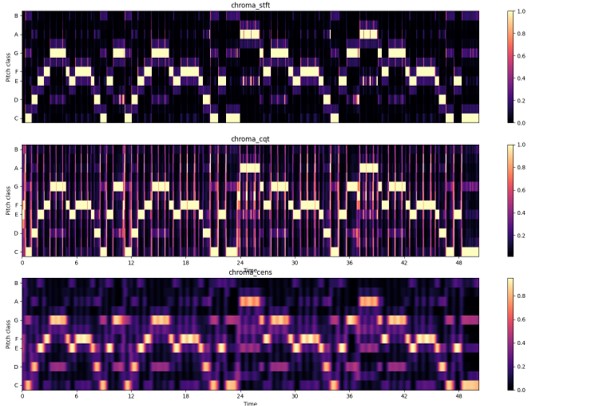

Spectral Observation

We first use librosa functions to extract the frequency, power and CQT (constant-Q transform, a transform well suited to musical audio; see Wikipedia) spectra of the audio signal and observe them. The following picture shows them.
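For reference, the textbook definition of the constant-Q transform explains why it suits note detection. The symbols \(f_{\min}\) (lowest analysed frequency), \(B\) (bins per octave) and \(k\) (bin index) are notation chosen here, not names from the project code. The bin centre frequencies are spaced geometrically, and every bin has the same ratio \(Q\) of centre frequency to bandwidth:

\[
f_k = f_{\min} \cdot 2^{k/B}, \qquad Q = \frac{f_k}{f_{k+1} - f_k} = \frac{1}{2^{1/B} - 1}
\]

With \(B = 12\), adjacent bins are exactly one semitone apart and \(Q \approx 16.8\), so each bin lines up with one key of an equal-tempered piano.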

Sampling and Pre-processing

We use the following code to get the fundamental frequency of each audio frame.

import librosa

import numpy as np

y, sr = librosa.load('./demo1.wav') # Load the .wav file: samples y and sampling rate sr

x = np.abs(librosa.stft(y)) # Magnitude of the short-time Fourier transform

xx = librosa.amplitude_to_db(x, ref=np.median) # Convert the magnitudes to decibels relative to their median

f0 = librosa.yin(y, fmin=262, fmax=1967, sr=sr) # Use the YIN algorithm to estimate the fundamental frequency per frame

Audio Processing

In both live and recorded music there are rests between notes, which interfere with identification. To handle this, we process the decibel matrix to get the mean decibel level of each frequency component, so that thresholds can be set to decide which note is sounding.

In this process, we use the librosa.hz_to_note() function to map each frequency to a note. We set a decibel threshold to decide whether an audio signal is present, and a time threshold to decide whether the signal should be treated as a new note.
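The two thresholds can be illustrated with a minimal sketch. It is not the project's actual code: `hz_to_note_name` is a pure-Python stand-in for librosa.hz_to_note() (nearest equal-tempered note, A4 = 440 Hz), and `segment_notes`, `db_threshold` and `min_frames` are hypothetical names with arbitrary default values. The sketch assumes one fundamental-frequency estimate and one loudness value in decibels per frame.

```python
import math

NOTE_NAMES = ['C', 'C♯', 'D', 'D♯', 'E', 'F', 'F♯', 'G', 'G♯', 'A', 'A♯', 'B']

def hz_to_note_name(freq_hz):
    """Stand-in for librosa.hz_to_note(): nearest equal-tempered note name."""
    midi = int(round(69 + 12 * math.log2(freq_hz / 440.0)))
    return f"{NOTE_NAMES[midi % 12]}{midi // 12 - 1}"

def segment_notes(f0_frames, db_frames, db_threshold=-30.0, min_frames=3):
    """Group frames into (note, frame_count) pairs; note is None for a rest.

    db_threshold (assumed value): frames quieter than this count as a rest.
    min_frames (assumed value): a run shorter than this is not treated as a
    new note and is absorbed into the run before it.
    """
    labels = [hz_to_note_name(f) if db >= db_threshold else None
              for f, db in zip(f0_frames, db_frames)]

    # Run-length encode the per-frame labels.
    runs = []
    for label in labels:
        if runs and runs[-1][0] == label:
            runs[-1][1] += 1
        else:
            runs.append([label, 1])

    # Absorb short runs and join neighbours that end up with the same label.
    merged = []
    for label, count in runs:
        if merged and (count < min_frames or merged[-1][0] == label):
            merged[-1][1] += count
        else:
            merged.append([label, count])
    return [(label, count) for label, count in merged]
```

A one-frame pitch glitch inside a held note is absorbed here, which is also why several genuinely short notes can be merged into one longer note.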

Output

We map each note to a number with the following dictionary, so that it can be sent to the I/O port to drive the motor.

mapp = {'C3':'-7','C♯3':'-7','D3':'-6','D♯3':'-6','E3':'-5','F3':'-4',

'F♯3':'-4','G3':'-3','G♯3':'-3','A3':'-2','A♯3':'-2','B3':'-1','C4':'1',

'C♯4':'1','D4':'2','D♯4':'2','E4':'3','F4':'4','F♯4':'4','G4':'5',

'G♯4':'5','A4':'6','A♯4':'6','B4':'7','C5':'8','C♯5':'8','D5':'9',

'D♯5':'9','E5':'10','F5':'11','F♯5':'11','G5':'12','G♯5':'12','A5':'13',

'A♯5':'13','B5':'14','C6':'15','C♯6':'15','D6':'16','D♯6':'16',

'E6':'17','F6':'18','F♯6':'18','G6':'19','G♯6':'19','A6':'20',

'A♯6':'20','B6':'21',}

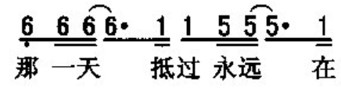

Finally, we get the following list, in which each row gives the note number and its duration.

-2 500

6 250

6 1000

1 250

1 500

5 250

5 1000

1 250

0 1000
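A minimal sketch of how rows in this form could be produced from (note name, frame count) pairs. Several things are assumptions rather than facts from the project: that the durations are in milliseconds, that `0` stands for a rest, and that frames are 512 samples apart at a 22050 Hz sampling rate (these match librosa's defaults for load() and for yin() with a 2048-sample frame). `MAPP_EXCERPT`, `frames_to_ms` and `to_output_rows` are hypothetical names, and the table is only an excerpt of the note dictionary.

```python
# Excerpt of the note-to-number dictionary; the full table would be used in practice.
MAPP_EXCERPT = {'A3': '-2', 'C4': '1', 'G4': '5', 'A4': '6'}

def frames_to_ms(n_frames, sr=22050, hop_length=512):
    """Convert a frame count to milliseconds (sr and hop_length are assumed)."""
    return round(1000 * n_frames * hop_length / sr)

def to_output_rows(segments, table, rest_code='0'):
    """Turn (note_name or None, frame_count) pairs into 'number duration' rows."""
    rows = []
    for note, n_frames in segments:
        code = rest_code if note is None else table.get(note)
        if code is None:
            continue  # note outside the table: skipped in this sketch
        rows.append(f"{code} {frames_to_ms(n_frames)}")
    return rows
```

For example, `to_output_rows([('A3', 22), (None, 43)], MAPP_EXCERPT)` yields one row for the note and one row for the rest.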

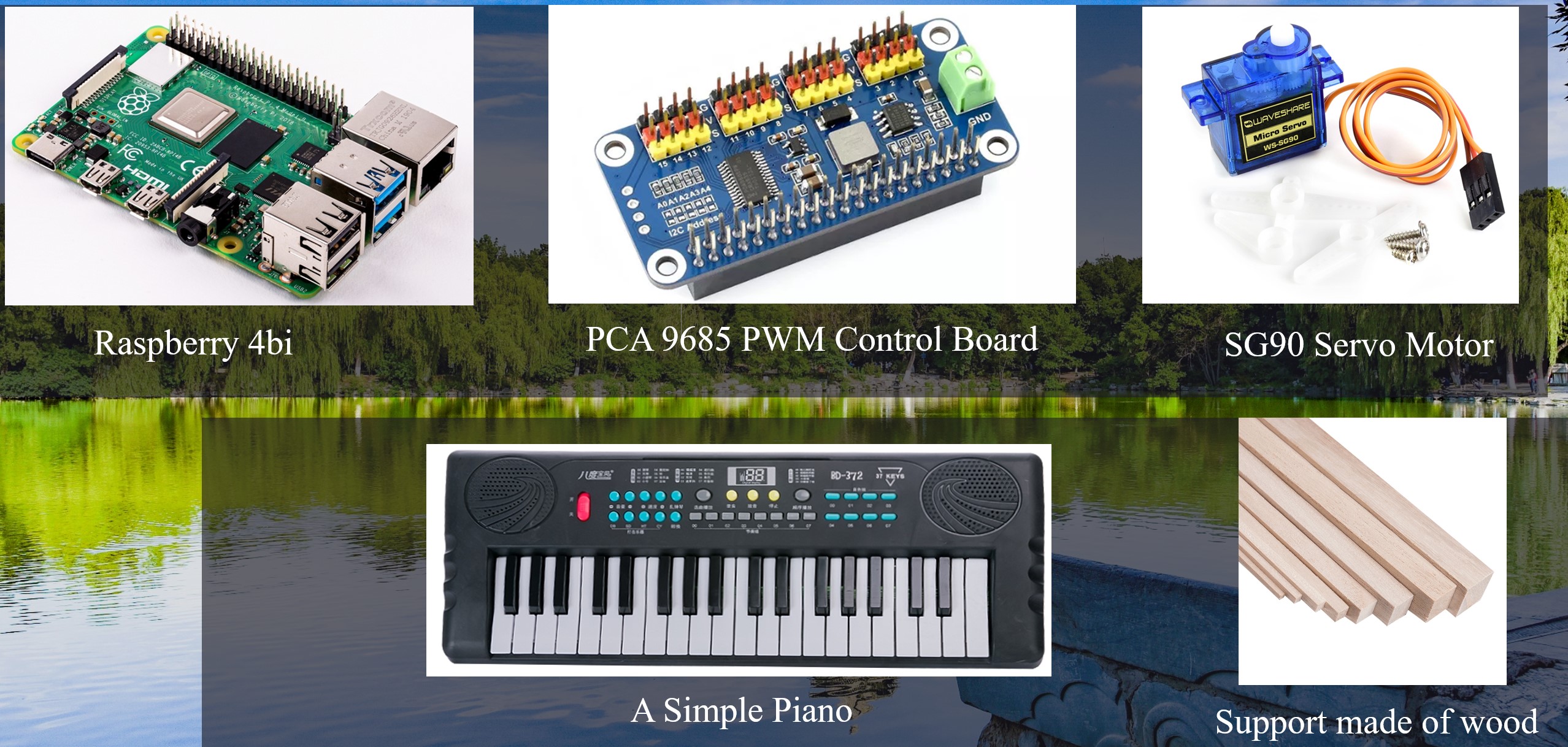

Hardware Part

System Architecture

Control Method

- The program passes the note and duration produced in the software part to the I/O function.

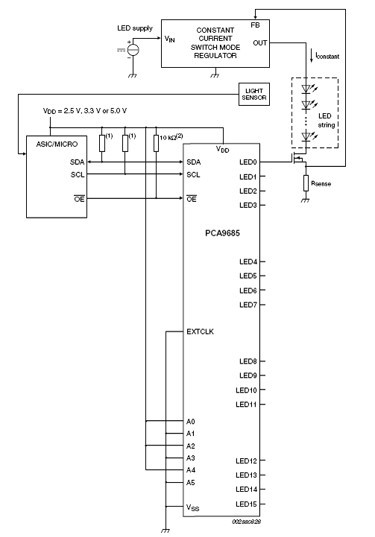

- As shown in the above figure, the PCA9685 communicates with the Raspberry Pi 4B over the \(I^2C\) protocol. The I/O function uses the PCA9685 library to find the address of the connected servo motor. By configuring the duty cycle of the PWM signal, we can rotate the servo motor. The duration is controlled with the function time.sleep().

- Multiple triggers are mounted on the motor, which can be used to press the keys of the keyboard.
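The control method above can be sketched without any hardware attached. The only hardware fact used is that the PCA9685 has 12-bit PWM resolution (4096 steps per period). The 50 Hz frequency and the 0.5 to 2.5 ms pulse range are typical hobby-servo values assumed here, not figures from this project. `angle_to_ticks`, `play`, `press` and `release` are hypothetical names; in a real setup `press` and `release` would call whichever PCA9685 library is in use.

```python
import time

PCA9685_STEPS = 4096  # 12-bit PWM resolution of the PCA9685

def angle_to_ticks(angle_deg, freq_hz=50, min_pulse_ms=0.5,
                   max_pulse_ms=2.5, max_angle=180):
    """Duty cycle for a servo angle, as an on-time count out of 4096 steps.

    The frequency and pulse range are assumed typical servo values.
    """
    pulse_ms = min_pulse_ms + (max_pulse_ms - min_pulse_ms) * angle_deg / max_angle
    period_ms = 1000.0 / freq_hz
    return round(pulse_ms / period_ms * PCA9685_STEPS)

def play(rows, press, release, sleep=time.sleep):
    """Play (note_number, duration_ms) rows; note 0 is assumed to be a rest.

    press/release are hypothetical callbacks that move the servo for a note.
    """
    for note, duration_ms in rows:
        if note != 0:
            press(note)
        sleep(duration_ms / 1000.0)  # hold the key (or stay silent) for the duration
        if note != 0:
            release(note)
```

Passing `sleep` as a parameter keeps the timing logic testable without actually waiting.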

Display

To keep the signal clean and accurate, all the audio was recorded with *GarageBand* from *Apple Inc.*

Comment

1. Shortcomings: This project can only accurately identify very simple music, while in practice much music has complex rhythms, such as ties, syncopation and dotted notes. For example, this project may treat several notes separated by very short intervals as one single note.

2. Beyond the simple spectral analysis of the audio signal, machine learning methods could be applied to the spectral information to capture more features. For instance, we could build separate classifiers for pitch and rhythm.